Software Safety now has a blog! Check it out.

This site has been listed as the EG3 Editor's Choice in the Embedded Safety category for February 2004.

eCLIPS gives this site four of five stars in the September 7th 2004 SAFETY CRITICAL - DESIGN GUIDE.


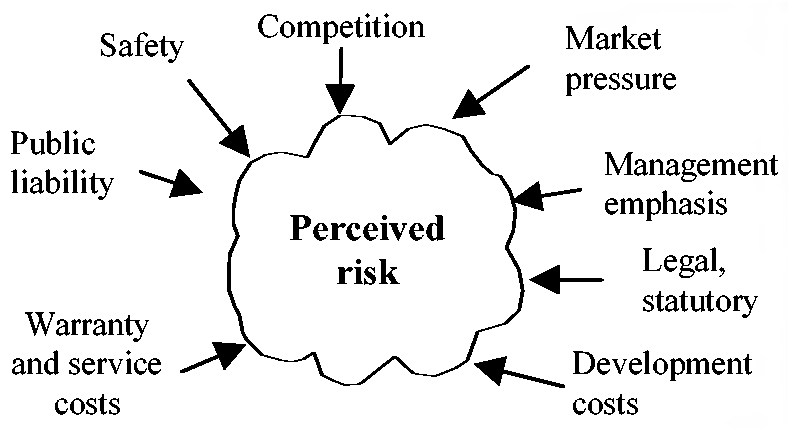

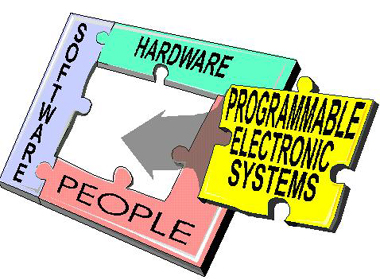

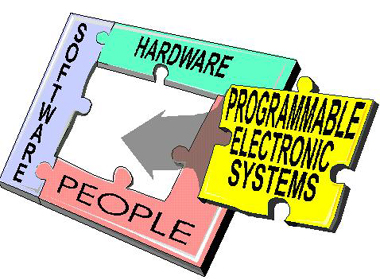

While I concentrate on Software Safety on this site, it is important to note that no software works in isolation. The entire system must be designed to be safe. The system contains the software, the hardware, the users, and the environment. All must be given consideration when developing software. All parts of the system must be safe.

From Auto Week:

It's getting harder to market safety because newer systems are more likely to be a software program than something visible.

"If the system is not visible in action, and is only indicated by a small lamp on the instrument panel, only the smart buyers will understand how important it is," - Fredrik Arp, CEO of Volvo Car Corp.

IN EMBEDDED SYSTEMS, SAFETY-CRITICAL IS THE BEST POLICY

With the passing of each week, embedded systems become more pervasive and pervasively connected, with even the most remote device dependent to some degree on the reliability and safety-critical operation of other devices or systems.

To be sure you are building in the right safety-critical features, read the Technical Insight by David Kalinsky from Enea.

A good summary of many of the detailed items found on this site appears in:

"Architecture of safety-critical systems"

It is one thing to know your system is safety-critical; it is another to know how to deal with it. David Kalinsky explains how to evaluate errors, categorize them, and safely handle them when they happen.

How do you define Software Quality?

Software Quality is not just how many bugs are removed during testing. Rather, Software Quality is defined as:

- Customer satisfaction with the software product, as defined by functionality, usability, reliability, performance, installability and serviceability.

- Customer satisfaction with the software project, as defined by on-time delivery, on-budget delivery, and meeting the contracted system requirements.

The software industry is painfully discovering that the tactics and techniques that were successful ten years ago for established companies, or even those used last year by new entrepreneurial start-ups, are now failing to deliver expected results. The "Code, Ship, and the Customer will test" technique just does not cut it anymore. We have a whole raft of reasons why we do not make higher quality software:

- It is the user's fault

- Our models are wrong

- Our schools are not doing their job

- Our tools are inadequate

- The customer keeps changing their mind

- The schedule was impossible

You and I can do better!

Definition of Terms:

The FDA's Glossary of Computerized System and Software Development Terminology defines many of the terms used on this site.

Defect: The difference between the expectation and the actual results.

Validation and Verification:

Validation and Verification are a pair of terms you will find when working with Software Safety. Many people do not understand how they differ from each other. These are my working definitions for Validation and Verification (V&V):

Validation: Have we built the correct device? Do we meet the customer's requirements?

Verification: Have we built the device correctly? Did we find and remove all of the 'bugs'?

Requirements and Specifications:

Clarifying the distinction between the terms "requirement" and "specification" is important.

My working definitions for Requirements and Specifications:

Requirements are a statement of what the customer wants and needs. Requirements are used for validation.

Specifications are the documentation of how the customer requirements are met by the system design. Specifications are used for verification.

A requirement can be any need or expectation for a system or for its software. Requirements reflect the stated or implied needs of the customer, and may be market-based, contractual, or statutory, as well as an organization's internal requirements. There can be many different kinds of requirements (e.g., design, functional, implementation, interface, performance, or physical requirements). Software requirements are typically derived from the system requirements for those aspects of system functionality that have been allocated to software. Software requirements are typically stated in functional terms and are defined, refined, and updated as a development project progresses. Success in accurately and completely documenting software requirements is a crucial factor in successful validation of the resulting software.

A specification "means any requirement with which a product, process, service, or other activity must conform." (See 21 CFR§820.3(y).) It may refer to or include drawings, patterns, or other relevant documents and usually indicates the means and the criteria whereby conformity with the requirement can be checked. There are many different kinds of written specifications, e.g., system requirements specification, software requirements specification, software design specification, software test specification, software integration specification, etc. All of these documents establish "specified requirements" and are design outputs for which various forms of verification are necessary.

Device failure (21 CFR§821.3(d)). A device failure is the failure of a device to perform or function as intended, including any deviations from the device's performance specifications or intended use.

The best introduction to Software Safety comes from the Food and Drug Administration, in their document "General Principles of Software Validation; Final Guidance for Industry and FDA Staff".

The FDA's analysis of 3140 medical device recalls conducted between 1992 and 1998 reveals that 242 of them (7.7%) are attributable to software failures. Of those software related recalls, 192 (or 79%) were caused by software defects that were introduced when changes were made to the software after its initial production and distribution. Software validation and other related good software engineering practices discussed in this guidance are a principal means of avoiding such defects and resultant recalls.

DEFECT PREVENTION:

Software quality assurance needs to focus on preventing the introduction of defects into the software development process and not on trying to "test quality into" the software code after it is written. Software testing is very limited in its ability to surface all latent defects in software code. For example, the complexity of most software prevents it from being exhaustively tested. Software testing is a necessary activity. However, in most cases software testing by itself is not sufficient to establish confidence that the software is fit for its intended use. In order to establish that confidence, software developers should use a mixture of methods and techniques to prevent software errors and to detect software errors that do occur. The "best mix" of methods depends on many factors including the development environment, application, size of project, language, and risk.

"The major goal of software testing is to discover errors in the software with a secondary goal of building confidence in the proper operation of the software when testing does not discover errors. The conflict between these two goals is apparent when considering a testing process that did not detect any errors. In the absence of other information, this could mean either that the software is high quality or that the testing process is low quality." - Structured Testing: A Testing Methodology Using the Cyclomatic Complexity Metric by Watson and McCabe.

"Program testing can be used to show the presence of bugs, but never to show their absence." - Edsger W. Dijkstra; Technological University Eindhoven, The Netherlands.

Dijkstra's 1972 Turing Award lecture can be obtained as a difficult-to-read PDF file.

"Late inspection and testing are the simplest, most expensive, and least effective way to find bugs. Before manufacturers adopted modern quality techniques their main approach to removing defects was to inspect each product in the last stage of production once it had been built, but before it was shipped. If the product didn't meet specifications, then it was either reworked or scrapped, both expensive options. Late inspection is prone to human error and rarely finds all defects." - What Software Development Projects Can Learn from the Quality Revolution by Clarke Ching.

B. Beizer, in Software Testing Techniques (Van Nostrand Reinhold, New York, second edition, 1990), gives an example illustrating an aspect of software complexity concerning the number of paths through a section of code. Given that a section of software has 2 loops, 4 branches, and 8 states, Beizer calculates that the number of paths through the code exceeds 8000.
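Path counts grow multiplicatively, which is why exhaustive path testing breaks down. This is also the motivation for Watson and McCabe's structured testing, which asks you to exercise only v(G) = e - n + 2p independent (basis) paths, where v(G) is the cyclomatic complexity. The Python sketch below is only an illustration of both ideas. It is not Beizer's calculation and not McCabe's tooling. The graph encoding (a dictionary of successor lists), the function names, and the example graph are all hypothetical.

```python
"""Path explosion versus basis paths (illustrative sketch, hypothetical graphs)."""


def paths_through_body(two_way_branches):
    """Independent two-way branches in sequence: each one doubles the path count."""
    return 2 ** two_way_branches


def paths_through_loop(body_paths, max_iterations):
    """Loop run 0..max_iterations times; each iteration may take any body path."""
    return sum(body_paths ** k for k in range(max_iterations + 1))


def connected_components(graph):
    """Count weakly connected components of a {node: [successors]} graph."""
    adj = {node: set() for node in graph}
    for src, dsts in graph.items():
        for dst in dsts:
            adj.setdefault(dst, set())
            adj[src].add(dst)
            adj[dst].add(src)
    seen, components = set(), 0
    for start in adj:
        if start in seen:
            continue
        components += 1
        stack = [start]
        while stack:
            node = stack.pop()
            if node not in seen:
                seen.add(node)
                stack.extend(adj[node] - seen)
    return components


def cyclomatic_complexity(graph):
    """v(G) = e - n + 2p: the number of basis paths structured testing exercises."""
    nodes = set(graph)
    for dsts in graph.values():
        nodes.update(dsts)
    edges = sum(len(dsts) for dsts in graph.values())
    return edges - len(nodes) + 2 * connected_components(graph)


# Hypothetical control-flow graph: a loop whose body contains one if/else.
LOOP_WITH_IF = {
    "start": ["loop_test"],
    "loop_test": ["if_test", "end"],
    "if_test": ["then", "else"],
    "then": ["loop_test"],
    "else": ["loop_test"],
    "end": [],
}

if __name__ == "__main__":
    body = paths_through_body(4)                 # 16 paths through one pass of the body
    print(paths_through_loop(body, 3))           # up to 3 iterations: 4369 paths
    print(cyclomatic_complexity(LOOP_WITH_IF))   # 3 basis paths
```

Even the tiny `LOOP_WITH_IF` graph has 2 paths per pass. If you bound its loop at 3 iterations, that already gives `paths_through_loop(2, 3) == 15` distinct paths, while v(G) stays at 3. With no bound, the path count is unlimited.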

TIME AND EFFORT:

To build a case that the software is validated requires time and effort. Preparation for software validation should begin early, i.e., during design and development planning and design input. The final conclusion that the software is validated should be based on evidence collected from planned efforts conducted throughout the software life-cycle.

MAINTENANCE AND SOFTWARE CHANGES:

Changes made to correct errors and faults in the software are corrective maintenance. Changes made to the software to improve the performance, maintainability, or other attributes of the software system are perfective maintenance. Software changes to make the software system usable in a changed environment are adaptive maintenance.

Cost of Modifications

Do it early in the design cycle!

"Writing code is not production, it's not always craftsmanship (though it can be), it's design. Design is that nebulous area where you can add value faster than you add cost." - Joel Spolsky.

The Therac-25 incident is the classic example of software done badly. What was the cost of inadequate software engineering practices? Six human casualties.

ISO 13485:2003 Medical devices - Quality management systems - Requirements for regulatory purposes.

This International Standard specifies requirements for a quality management system that can be used by an organization for the design and development, production, installation and servicing of medical devices, and the design, development, and provision of related services. It can also be used by internal and external parties, including certification bodies, to assess the organization's ability to meet customer and regulatory requirements.

I want to thank John J. Sammarco from the NIOSH Pittsburgh Research Laboratory branch for his permission to quote from the presentation slides he provided for the workshop "Programmable Electronic Mining Systems: An Introduction to Safety" (August 1999).

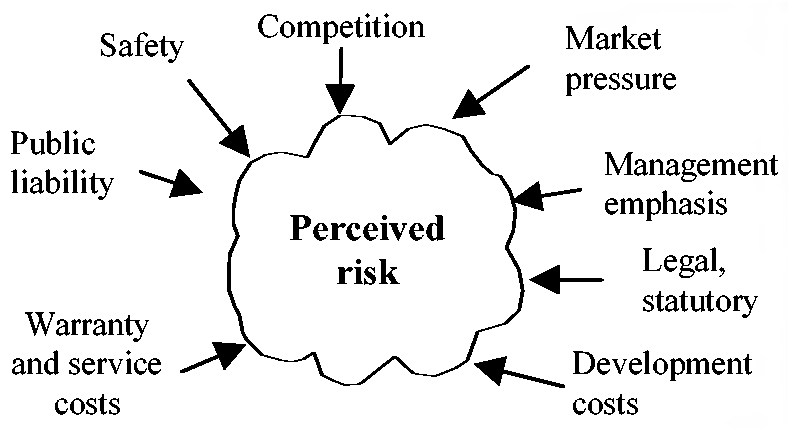

From the Workshop:

Functional and operational safety starts at the system level. Safety cannot be assured if efforts are focused only on software. The software can be totally free of 'bugs' and employ numerous safety features, yet the equipment can be unsafe because of how the software and all the other parts interact in the system. In other words, the sum can be less safe than the individual parts!

Thus, a system approach is needed. How does one address the safety of this system? Is it done by making the system more reliable, employing redundancy, or conducting extensive testing? All of these are necessary but not sufficient to ensure safety!

Making a system more reliable is not sufficient if the system has unsafe functions. What you could have is a system that reliably functions to cause unsafe conditions! Employing redundancy is not sufficient if the redundant parts are themselves unsafe.

Testing alone is not sufficient for safety. Studies show that testing doesn't find all the 'bugs', and some systems are too complex to test every condition.

The key to safety is to 'design in' safety early in the design by looking at the entire system, identifying hazards, designing to eliminate or reduce hazards, and repeating this approach over the system life cycle!
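One way to keep the "identify hazards, then eliminate or reduce them" loop alive over the life cycle is a hazard log that gets re-ranked whenever the design changes. The Python sketch below is a minimal illustration only. The severity and likelihood scales, the use of their product as a risk score, the threshold, and the example hazards are all hypothetical placeholders. None of them come from the Workshop, IEC 61508, or any other standard.

```python
from dataclasses import dataclass


@dataclass
class Hazard:
    description: str
    severity: int         # hypothetical scale: 1 (negligible) .. 4 (catastrophic)
    likelihood: int       # hypothetical scale: 1 (improbable) .. 5 (frequent)
    mitigation: str = ""  # design change that eliminates or reduces the hazard

    @property
    def risk(self) -> int:
        # Simple product score; a real project would use its own risk matrix.
        return self.severity * self.likelihood


# Hypothetical threshold: hazards scoring at or above it need a design mitigation.
MITIGATION_THRESHOLD = 8


def hazards_needing_action(hazards):
    """Unmitigated hazards at or above the threshold, highest risk first."""
    open_items = [h for h in hazards if h.risk >= MITIGATION_THRESHOLD and not h.mitigation]
    return sorted(open_items, key=lambda h: h.risk, reverse=True)


EXAMPLE_HAZARDS = [
    Hazard("Conveyor starts while a worker is in the maintenance area", 4, 2),
    Hazard("Stale sensor value used after an A/D fault", 3, 3),
    Hazard("Display shows pressure in the wrong units", 2, 2),
    Hazard("Motor overheats under sustained load", 3, 4, mitigation="Hardware thermal cutoff"),
]

if __name__ == "__main__":
    for hazard in hazards_needing_action(EXAMPLE_HAZARDS):
        print(hazard.risk, hazard.description)
```

The log is only useful if it is re-run at every design change. A mitigation added to one hazard can introduce a new hazard elsewhere in the system.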

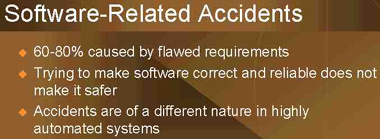

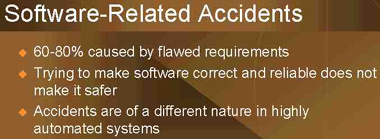

Depending on which study was being quoted in the Workshop, forty to eighty percent of all system failures are caused by project management!

Most system faults are created before the first line of code is written or the first schematic is drawn. These errors are caused by not understanding the requirements of the system.

Accident Causes:

One simple way of checking your understanding of a requirement is to ask yourself how you would test it. If you cannot specify a test that clearly shows the requirement has been met, then the requirement, or your understanding of it, needs refinement.
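For example, "the motor shall stop quickly" cannot be tested. A requirement such as "on a STOP command, the motor output shall be de-energized within 100 ms" can be. The minimal Python sketch below turns that kind of requirement into an executable check against a toy controller simulation. The requirement ID and wording, the 100 ms deadline, the 10 ms tick, and every class and function name are hypothetical, chosen only for illustration.

```python
# Hypothetical requirement R-12: "On a STOP command, the controller shall
# de-energize the motor output within 100 ms."
STOP_DEADLINE_MS = 100


class MotorControllerSim:
    """Toy controller: each tick() advances time 10 ms and processes a pending STOP."""

    TICK_MS = 10

    def __init__(self):
        self.now_ms = 0
        self.motor_on = True
        self.pending_stop = False

    def command_stop(self):
        self.pending_stop = True

    def tick(self):
        self.now_ms += self.TICK_MS
        if self.pending_stop:
            self.motor_on = False
            self.pending_stop = False


class SlowMotorControllerSim(MotorControllerSim):
    """A deliberately faulty variant that only acts on STOP once 150 ms have passed."""

    def tick(self):
        self.now_ms += self.TICK_MS
        if self.pending_stop and self.now_ms >= 150:
            self.motor_on = False
            self.pending_stop = False


def check_stop_deadline(controller):
    """Executable form of R-12: True if the motor is off within the deadline."""
    start = controller.now_ms
    controller.command_stop()
    # Run the simulation until the motor is off or just past the deadline.
    while controller.motor_on and controller.now_ms - start <= STOP_DEADLINE_MS:
        controller.tick()
    elapsed = controller.now_ms - start
    return (not controller.motor_on) and elapsed <= STOP_DEADLINE_MS
```

`check_stop_deadline(MotorControllerSim())` passes, and `check_stop_deadline(SlowMotorControllerSim())` fails. Being able to write a check like this, with a concrete pass/fail criterion, is the sign that the requirement is specific enough.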

Some Safety Myths:

- Just make it reliable

- Just use redundancy

- Just do a lot of testing

- Just make the software "safe"

- Just do it all in software

- It's always the operator's fault

The earlier in the design cycle that the requirements are clearly understood, the lower the cost of any needed changes.


Within the specification, is there a clear and concise statement of:

(i) each safety-related function to be implemented?

(ii) the information to be given to the operator at any time?

(iii) the required action on each operator command, including illegal or unexpected commands?

(iv) the communications requirements between the embedded system and other equipment?

(v) the initial states for all internal variables and external interfaces?

[A big help here is to use that nit-pickiest of all programs, Lint, on the variable issue. Also check out Splint.]

(vi) the required action on power down and recovery? (e.g. important data saved in non-volatile memory.)

(vii) each mode and the initiators of mode transitions? (e.g. start-up, normal operation, shutdown)

[Going from Auto Mode to Maintenance Mode and then back to Auto Mode, picking up where you left off in Auto Mode, could be deadly in some cases.]

(viii) the anticipated ranges of input variables and the required action on out-of-range variables?

[What happens when you have channel-to-channel leakage in your A/D because the input that you are not reading is driving the entire mux into saturation?]

(ix) the required performance in terms of speed, accuracy, and precision?

(x) the constraints put on the software by the hardware? (e.g. speed, memory size, word length)

(xi) internal self-checks to be carried out and the action on detection of a failure?
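Items (iii), (vii) and (viii) can be made concrete in code. An explicit transition table means that every operator command not listed is, by construction, an illegal command with a defined response. A range check gives out-of-range inputs a defined action. The Python sketch below is illustrative only. The mode names, the command strings, the 0 to 250 pressure range, and the choice of SHUTDOWN as the response to a bad input are all hypothetical. Leaving Maintenance returns to Startup rather than resuming Auto, which is one possible answer to the bracketed Auto/Maintenance warning.

```python
from enum import Enum, auto


class Mode(Enum):
    STARTUP = auto()
    AUTO = auto()
    MAINTENANCE = auto()
    SHUTDOWN = auto()


# Hypothetical transition table: (current mode, operator command) -> next mode.
# Any (mode, command) pair not listed here is treated as illegal or unexpected.
TRANSITIONS = {
    (Mode.STARTUP, "start_auto"): Mode.AUTO,
    (Mode.AUTO, "enter_maintenance"): Mode.MAINTENANCE,
    # Leaving maintenance forces a fresh start-up sequence; AUTO is never
    # resumed where it left off.
    (Mode.MAINTENANCE, "exit_maintenance"): Mode.STARTUP,
    (Mode.STARTUP, "shutdown"): Mode.SHUTDOWN,
    (Mode.AUTO, "shutdown"): Mode.SHUTDOWN,
    (Mode.MAINTENANCE, "shutdown"): Mode.SHUTDOWN,
}


def next_mode(current, command):
    """Return (new_mode, accepted). Illegal commands are rejected; the mode is unchanged."""
    target = TRANSITIONS.get((current, command))
    if target is None:
        return current, False
    return target, True


# Hypothetical anticipated range for one analog input (engineering units).
PRESSURE_RANGE = (0.0, 250.0)


def in_range(value, valid_range=PRESSURE_RANGE):
    """True only for values inside the range; a NaN reading makes the comparison False."""
    low, high = valid_range
    return low <= value <= high


def on_reading(current, value):
    """Required action on an out-of-range input (assumed here): go to SHUTDOWN."""
    if not in_range(value):
        return Mode.SHUTDOWN
    return current


if __name__ == "__main__":
    print(next_mode(Mode.AUTO, "start_auto"))               # rejected, mode stays AUTO
    print(next_mode(Mode.MAINTENANCE, "exit_maintenance"))  # accepted, back to STARTUP
    print(on_reading(Mode.AUTO, 300.0))                     # out of range, goes to SHUTDOWN
```

The table-driven form also documents item (vii) directly: the table is the list of modes and the initiators of each transition, and a reviewer can check it against the specification line by line.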

Does the software contain adequate error detection facilities allied to error containment, recovery, or safe shutdown procedures?
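Here is one small example of error detection allied to containment and safe shutdown. The Python sketch below stores a hypothetical block of calibration parameters in non-volatile memory together with a CRC-32. It checks the block at start-up and refuses to run on corrupt data. The record layout, the function names, and the two status strings are assumptions for illustration. `zlib.crc32` and the `struct` module are standard-library calls.

```python
import struct
import zlib

# Hypothetical non-volatile record layout: three little-endian float32
# calibration values followed by a CRC-32 of those 12 bytes.
PAYLOAD_FORMAT = "<3f"
CRC_FORMAT = "<I"


def pack_parameters(gain, offset, limit):
    """Build the record that would be written to non-volatile memory."""
    payload = struct.pack(PAYLOAD_FORMAT, gain, offset, limit)
    return payload + struct.pack(CRC_FORMAT, zlib.crc32(payload))


def load_parameters(record):
    """Return the parameters if length and CRC check out, otherwise None."""
    payload_size = struct.calcsize(PAYLOAD_FORMAT)
    if len(record) != payload_size + struct.calcsize(CRC_FORMAT):
        return None
    payload, crc_bytes = record[:payload_size], record[payload_size:]
    (stored_crc,) = struct.unpack(CRC_FORMAT, crc_bytes)
    if zlib.crc32(payload) != stored_crc:
        return None
    return struct.unpack(PAYLOAD_FORMAT, payload)


def startup_self_check(record):
    """Error detection plus containment: corrupt data is never used."""
    params = load_parameters(record)
    if params is None:
        # Containment: do not run on corrupt parameters; go to the safe state.
        return "SAFE_SHUTDOWN", None
    return "RUN", params
```

A CRC detects corruption but cannot correct it. What the system does next (use safe defaults, refuse to start, or alert the operator) is exactly the kind of "action on detection of a failure" that the specification should state.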

Are safety-critical areas of the software identified?

While you are probably not designing coal mining equipment, the documents of the System Safety Evaluation Program give you the procedures that you need to design a safe system.

These guidelines are based on industry standards such as IEC 61508 and UL 1998. Unlike IEC 61508 and UL 1998, the System Safety Evaluation Program guidelines can be downloaded for free, so you can learn what is required to design a safe system.

"As a result of a number of accidents and incidents involving mining machinery utilizing programmable electronics, MSHA and the National Institute for Occupational Safety and Health (NIOSH) have entered into a joint effort and developed a set of recommendation documents for addressing the functional safety of programmable electronics for mining. The recommendations are organized to form a risk-based safety framework, based on a system safety process, that considers the interfaces and interaction between the mining machinery hardware, software, human interface, and the operating environment for the equipment's full life cycle. The equipment's life cycle includes the stages of design, certification, commissioning, operation, maintenance and decommissioning.

These recommendation documents are intended to provide assistance to manufacturers and end users of complex mining equipment incorporating programmable electronics in addressing hazards associated with the design, operation and maintenance of their equipment. Equipment applications where these recommendations are applicable and where a number of accidents and incidents have occurred include remote controlled mining equipment, and longwall mining systems."

I participate in the System Safety Evaluation Program committee as a representative of industry. I wrote an introduction to the System Safety Evaluation Program for Circuit Cellar Magazine a few years ago; you can read it here.

Dave Reynolds, VP of marketing for MTL, sums up the importance of applying system safety guidelines in MINING Magazine, September 2002, Volume 187, No. 3:

"There are many potential users of the IEC 61508 who are not completely aware of the full implications of the standard," according to Dave Reynolds, VP of marketing for MTL. "Instrumented safety systems have typically been designed to established practice within a specific company," Reynolds explained. "The benefits of the IEC 61508 standard for users include applying a scientific approach to specifying and designing safety systems, quantifying risk and an appropriate protective system, clearly demonstrating the suitability of products that meet the standard, and allowing users to potentially save money by eliminating under- or over-specification of system components."

MTL, a leader in intrinsically safe process I/O products, has launched its new Safety Related (-SR) Series of products to allow customers to take advantage of the IEC 61508 standard. MTL's -SR products meet the IEC 61508 standard.

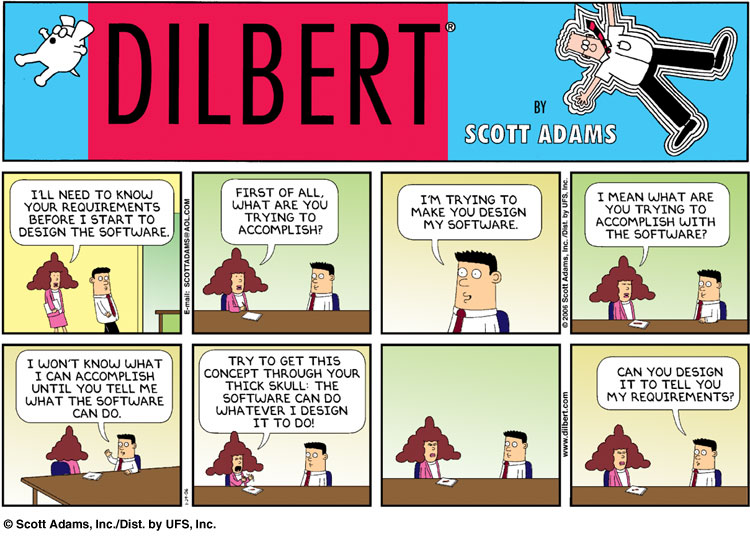

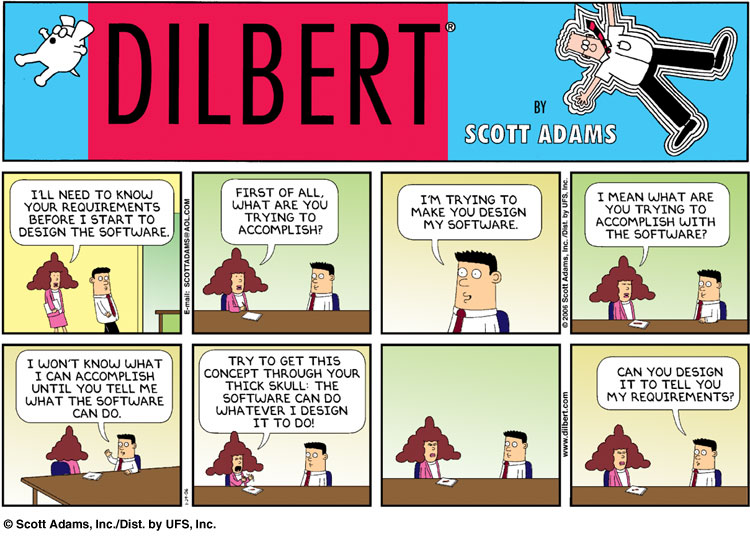

DILBERT: © Scott Adams/Dist. by United Features Syndicate, Inc.

This Dilbert Moment has been reproduced here with permission; it may not be reproduced elsewhere without permission.

Any logical engineer knows that interruptions are one of the most effective productivity killers around. Removing cement brick walls "to improve communication between staff members", something right out of a Dilbert cartoon, is not the way to create better software and products.

LEARNING HOW TO LEAD GEEKS

A Conversation with C2 Consulting's Paul Glen

If you've ever given what you thought was an incredibly passionate speech to a roomful of unmoved, unblinking developers, you can take solace in knowing that you're not alone. Indeed, C2 Consulting's Paul Glen has seen so many similar scenarios throughout his years as a management adviser that he felt compelled to write a book about them. Leading Geeks (Jossey-Bass, 2002) does not spend all 253 of its pages examining uncomfortable silences in meetings, but it does offer plenty of insight about why working with developers is different than working with, say, your sales staff (a group of people much more likely to jump out of their seats and give high-fives all around after your big speech).

...

That said, there are three key differences between leading developers and other employees:

...

First, developers are different from other employees. Among those who choose to become developers, there are common patterns of behavior, attitudes and values. These patterns influence how one should lead. For example, developers tend to be more loyal to their technology than to their company or even project. For most, the technology draws them to a career in development, rather than an attachment to some specific industry.

Developers also tend to have what I call the "passion for reason," a strong sense that all things are or should be completely rational rather than emotional. So if you're trying to motivate a group of developers to go out and work hard, the emotional, whip-up-the-passion approach so common in sales organizations usually falls flat.

Secondly, development work is fundamentally different from other work. This form of creative work does not conform to the manufacturing model that most management theories advocate. What you would lead someone to do significantly affects how you should lead them.

...

Here, the inherent ambiguity of technical work makes traditional leadership quite hard to do. Since most leaders think that their job is to tell people what to do, they become quite frustrated by technical projects, where one of the biggest jobs is to figure out what to do. In development projects, figuring out what to do is often harder than doing it.

And finally, power is useless with developers. This is not because developers are recalcitrant, but because power is about influencing behavior. But developers deliver most of their value through their thoughts, not their behavior. Traditional approaches to leadership are based largely on notions of power and aren't particularly useful when it comes to developers.

...

Examining the reasons for these failures, one discovers that the majority of projects fail not due to technical problems, but due to difficulties in leadership, management, client relationships and teamwork. In short, human problems doom projects, not technical ones.

...

What tends to motivate developers most of all is engaging work, the opportunity to learn new things, fair pay and the prospect of a future filled with more of the same.

...

Further Reading.

You can find information about the FAA Aircraft Certification Service's software programs, policy, guidance and training at this web site. It is particularly focused on software that has an effect on the airborne product (a "product" is an aircraft, an engine, or a propeller). The Aircraft Certification Service is concerned with the approval of software for airborne systems (e.g., autopilots, flight controls, engine controls), as well as software used to produce, test, or manufacture equipment to be installed on airborne products.

Note: DO-178B is not available for download. For information on obtaining a copy of DO-178B, visit the Radio Technical Commission for Aeronautics (RTCA) web site at http://www.rtca.org.

Do178Builder is tailored for production of DO-178B documentation, but is not limited to that use. DO-178B forces one to ask (and answer) many questions about the development effort, and this is valuable regardless of whether the software is targeted for airborne use or not. The answers to the questions are useful documentation.

U.S. Department of Transportation/Federal Aviation Administration:

Without their "buy-in" throughout the project life-cycle, they will find every reason not to use the system, and the project will incur unneeded costs just to ease their complaints.

19 TIPS FOR AGILE REQUIREMENTS MODELING

I'd like to share some vital tenets that will help to set an effective foundation for your agile requirements-modeling efforts:

1. "Active stakeholder participation is crucial." Project stakeholders must be available to provide requirements, to prioritize them, and to make decisions in a timely manner. It's critical that your project stakeholders understand this concept and are committed to it from the beginning of any project.

2. "Software must be based on requirements." If there are no requirements, you have nothing to build. The goal of software development is to build working software that meets the needs of your project stakeholders. If you do not know what those needs are, you can't possibly succeed.

3. "Nothing should be built that doesn't satisfy a requirement." Your system should be based on the requirements, the whole requirements, and nothing but the requirements.

4. "The goal is mutual understanding, not documentation." The fundamental aim of the requirements-gathering process is to understand what your project stakeholders want. Whether or not you create a detailed document describing those requirements, or perhaps just a collection of hand-drawn sketches and notes, is a completely different issue.

5. "Requirements come from stakeholders, not developers." Project stakeholders are the only official source of requirements. Yes, developers can suggest requirements, but stakeholders need to adopt those suggestions.

6. "Use your stakeholders' terminology." Don't force artificial, technical jargon onto your project stakeholders. They're the ones doing the modeling, and the ones the system is being built for; therefore, you should use their terminology to model the system.

7. "Publicly display models." Models are important communication channels, but they work only if people can actually see, and understand, them. I'm a firm believer in putting models, even if they're just sketches or collections of index cards, in public view where everyone can access and work on them.

8. "Requirements change over time." People often don't know what they want, and if they do, they usually don't know how to communicate it well. Furthermore, people change their minds; it's quite common to hear stakeholders say, "Now that I think about this some more ..." or "This really isn't what I meant." Worse yet, the external environment changes: perhaps your competitors announce a new strategy, or the government releases new legislation. Effective developers accept the fact that change happens and, better yet, they embrace it.

9. "Requirements must be prioritized." Stakeholders must prioritize the requirements, enabling you to constantly work on the most important ones and thus provide the most value for their IT investment.

10. "Requirements only need to be good enough." Not perfect? But you'll build the wrong thing! Agile developers don't need a perfect requirements specification, nor do they need a complete one, because they have access to their stakeholders. Not sure what a requirement means, because there isn't enough detail? Talk with your stakeholders and have them explain it; if they can't explain it, keep talking.

11. "Use simple, inclusive tools and techniques." It's possible, and in fact desirable, for stakeholders to be actively involved in modeling. However, stakeholders typically aren't trained in modeling techniques or complex modeling tools. Although one option is to invest several months to train your stakeholders in modeling tools and techniques, a much easier approach is to use simple tools, like whiteboards and paper, and simple modeling techniques. Simple tools and techniques are easy to teach and are therefore inclusive because anyone can work with them. Don't scare people with technology if it's not needed!

12. "You'll still need to explain the techniques, even the simple ones." Because people learn best by doing, it's often a good idea to lead stakeholders through the creation of several examples.

13. "Most requirements should be technology independent." I cringe when I hear terms such as "object-oriented", "structured" or "component-based" requirements. These terms are all categories of implementation technologies and therefore reflect architectural and design issues.

14. "Some requirements are technical." It's important to recognize that some requirements, such as the technical constraint that your system must use the standard J2EE and relational database technologies employed within your organization, are in fact technology dependent. Your stakeholders should understand when this is applicable, and why.

15. "You need multiple models." The requirements for a system are typically complex, dealing with a wide variety of issues. Because every model has its strengths and weaknesses, no one single model is sufficient; therefore you'll need several types of models to get the job done.

16. "You need only a subset of the models." When you fix something at home, you'll use only a few of the tools in your tool box, such as a screwdriver and a wrench. The next time you fix something, you may use different tools. Even though you have multiple tools available, you won't use them all at any given time. It's the same thing with software development: Although you have several techniques in your "intellectual toolbox," you'll use only a subset on any given project.

17. "The underlying process determines some artifacts." Different methodologies require different requirements artifacts. For example, the Rational Unified Process and Enterprise Unified Process both require use cases, Extreme Programming requires user stories and Feature-Driven Development requires features.

18. "Take a breadth-first approach." It's better to first paint a wide swath to try to get a feel for the bigger picture, than to focus narrowly on one small aspect of the system. By taking a breadth-first approach, you quickly gain an overall understanding of the system, and you can still dive into the details when appropriate. Taking an Agile Model-Driven Development approach, you gain this broad understanding as part of your initial modeling efforts during "Cycle 0."

19. "Start at your enterprise business model." Some organizations have what's called an enterprise business model that reflects their high-level business requirements. If your organization has one, and it's current, it's a perfect starting place to understand both your organization and how your system fits the overall picture. You should be able to identify which high-level requirements your system will (perhaps partially) fulfill; otherwise, it's a clear sign that either the model is out-of-date or it's not necessary in your organization.

This column was drawn from Chapter 4 of [Scott W. Ambler's] forthcoming book, The Object Primer, 3rd Edition: Agile Model Driven Development with UML 2 (Cambridge University Press, January 2004).
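Tips 2 and 3 ("Software must be based on requirements" and "Nothing should be built that doesn't satisfy a requirement") can be checked mechanically once requirements, design items, and tests carry IDs. The Python sketch below is a hypothetical traceability check. It is not part of Ambler's column and does not reflect any particular tool. The ID scheme, the dictionary layout, and the example data are assumptions. It also flags requirements that no test exercises.

```python
def trace_gaps(requirements, design_items, tests):
    """Report traceability gaps.

    requirements: {req_id: text}
    design_items: {item_id: set of req_ids the item implements}
    tests:        {test_id: set of req_ids the test checks}
    """
    known = set(requirements)
    implemented = set().union(*design_items.values())
    tested = set().union(*tests.values())
    return {
        # Tip 2: every requirement should end up in the design.
        "unimplemented": sorted(known - implemented),
        # Tip 3: every design item should trace to at least one known requirement.
        "untraced_items": sorted(
            item for item, reqs in design_items.items() if not reqs & known
        ),
        # Requirements that no test exercises.
        "untested": sorted(known - tested),
    }


# Hypothetical project data.
REQS = {
    "R1": "Stop the motor within 100 ms of a STOP command",
    "R2": "Display pump pressure",
    "R3": "Log every mode change",
}
DESIGN = {"D1": {"R1"}, "D2": {"R2"}, "D3": set()}  # D3: a feature nobody asked for
TESTS = {"T1": {"R1"}}

if __name__ == "__main__":
    print(trace_gaps(REQS, DESIGN, TESTS))
```

A non-empty report does not tell you who is right. D3 may reveal a missing requirement that a stakeholder needs to adopt (tip 5), or it may be gold-plating that should be removed.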

If you cannot get full and visible commitment from the highest levels of the company's management, then initiate and implement process improvement changes within your sphere of influence.

The ongoing success of you and your team shows up on the bottom line, and almost all managers will see that as A Good Thing. Once you have the attention of their wallet, you can expect more support...